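Overview

Adjusting the learning rate dynamically during training generally helps the model train better than keeping it fixed.

A learning rate scheduler can be configured differently for each training run, and several kinds are available.

Types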

    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import Dense
    from tensorflow.keras.optimizers import SGD
    from tensorflow.keras.callbacks import LearningRateScheduler
    import tensorflow as tf
    import numpy as np

    def step_decay(epoch):
        # Step decay: start at 0.1 and halve the rate every 5 epochs
        start = 0.1
        drop = 0.5
        epochs_drop = 5.0
        lr = start * (drop ** np.floor(epoch / epochs_drop))
        # Return a plain Python float, which the callback accepts
        return float(lr)

    model = Sequential([Dense(10)])
    model.compile(optimizer=SGD(), loss='mse')

    # The callback calls step_decay(epoch) at the start of every epoch
    lr_scheduler = LearningRateScheduler(step_decay, verbose=1)
    history = model.fit(np.arange(10).reshape(10, -1), np.zeros(10),
                        epochs=10, callbacks=[lr_scheduler], verbose=0)

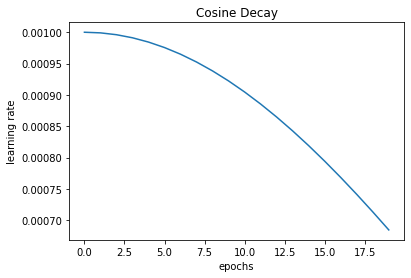
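To make the step decay arithmetic concrete, the schedule can be evaluated without training a model. The following is a minimal sketch in plain Python that reuses the same constants as `step_decay` above; turning the constants into keyword arguments is an assumption made here for illustration.

```python
import math

def step_decay(epoch, start=0.1, drop=0.5, epochs_drop=5.0):
    """Multiply the starting rate by `drop` once every `epochs_drop` epochs."""
    return start * (drop ** math.floor(epoch / epochs_drop))

# Learning rate for each of the 10 epochs used in the example above
schedule = [step_decay(epoch) for epoch in range(10)]
for epoch, lr in enumerate(schedule):
    print(f"epoch {epoch}: lr = {lr}")
```

Epochs 0 to 4 use 0.1 and epochs 5 to 9 use 0.05, since the exponent only increases when `epoch / epochs_drop` crosses a whole number.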

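    # Cosine decay: anneal from 0.001 down to alpha * 0.001 over 50 optimizer steps.
    # Older TF 2.x releases exposed this class as tf.keras.experimental.CosineDecay.
    cos_decay = tf.keras.optimizers.schedules.CosineDecay(
        initial_learning_rate=0.001, decay_steps=50, alpha=0.001)

    model = Sequential([Dense(10)])

    # A CosineDecay object must be passed as the optimizer's learning_rate argument,
    # so no LearningRateScheduler callback is needed here
    model.compile(optimizer=SGD(learning_rate=cos_decay), loss='mse')
    history = model.fit(np.arange(10).reshape(10, -1), np.zeros(10),
                        epochs=10, verbose=0)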

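- initial_learning_rate = the initial learning rate

- decay_steps = the number of optimizer steps (batch updates, not epochs) over which the rate decays

- alpha = the floor of the schedule, given as a fraction of initial_learning_rate

- (minimum learning rate = alpha * initial_learning_rate)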
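How the three parameters interact is easier to see from the formula itself. The sketch below reimplements the cosine decay formula as documented for the Keras schedule in plain Python; the function name `cosine_decay` and the sample steps are chosen here for illustration and are not part of the original post.

```python
import math

def cosine_decay(step, initial_learning_rate=0.001, decay_steps=50, alpha=0.001):
    """Cosine decay as documented for the Keras CosineDecay schedule."""
    step = min(step, decay_steps)  # the rate stays at its floor after decay_steps
    cosine = 0.5 * (1.0 + math.cos(math.pi * step / decay_steps))
    decayed = (1.0 - alpha) * cosine + alpha
    return initial_learning_rate * decayed

lr_start = cosine_decay(0)   # equals initial_learning_rate
lr_mid = cosine_decay(25)    # halfway through the decay
lr_end = cosine_decay(50)    # equals alpha * initial_learning_rate
print(lr_start, lr_mid, lr_end)
```

With these values the rate starts at 0.001 and bottoms out at about 1e-6, which is `alpha * initial_learning_rate`, and it stays there for any step beyond `decay_steps`.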

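    # Cosine decay with warm restarts: restart every 10 steps, each time at 90% of the previous peak.
    # Older TF 2.x releases exposed this class as tf.keras.experimental.CosineDecayRestarts.
    cos_decay_ann = tf.keras.optimizers.schedules.CosineDecayRestarts(
        initial_learning_rate=0.1, first_decay_steps=10, t_mul=1.0, m_mul=0.9, alpha=0.0)

    model = Sequential([Dense(10)])

    # A CosineDecayRestarts object must be passed as the optimizer's learning_rate argument
    model.compile(optimizer=SGD(learning_rate=cos_decay_ann), loss='mse')
    history = model.fit(np.arange(10).reshape(10, -1), np.zeros(10),
                        epochs=10, verbose=0)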

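- initial_learning_rate = the initial learning rate

- first_decay_steps = the number of steps in the first decay period

- t_mul = the factor by which the length of each successive decay period is multiplied (with t_mul=1, every period lasts first_decay_steps)

- m_mul = the factor by which the starting learning rate is multiplied at each warm restart

- alpha = the floor of the schedule, given as a fraction of initial_learning_rate

- (minimum learning rate = alpha * initial_learning_rate)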
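The roles of t_mul and m_mul can be checked by computing the schedule by hand. The following is a rough plain-Python sketch that follows the restart logic as I understand it from the Keras `CosineDecayRestarts` schedule; the function name `cosine_decay_restarts` is hypothetical, and the code is an approximation for illustration rather than the library's implementation.

```python
import math

def cosine_decay_restarts(step, initial_learning_rate=0.1, first_decay_steps=10,
                          t_mul=1.0, m_mul=0.9, alpha=0.0):
    """Cosine decay with warm restarts, evaluated at a single optimizer step."""
    completed = step / first_decay_steps
    if t_mul == 1.0:
        # Every period has the same length
        i_restart = math.floor(completed)
        completed -= i_restart
    else:
        # Period lengths form a geometric series with ratio t_mul
        i_restart = math.floor(
            math.log(1.0 - completed * (1.0 - t_mul)) / math.log(t_mul))
        sum_r = (1.0 - t_mul ** i_restart) / (1.0 - t_mul)
        completed = (completed - sum_r) / t_mul ** i_restart
    m_fac = m_mul ** i_restart  # the peak shrinks by m_mul at every restart
    cosine = 0.5 * m_fac * (1.0 + math.cos(math.pi * completed))
    return initial_learning_rate * ((1.0 - alpha) * cosine + alpha)

for step in (0, 5, 10, 20):
    print(step, cosine_decay_restarts(step))
```

With the settings from the example (t_mul=1, m_mul=0.9), the rate restarts at step 10 at roughly 0.09 and at step 20 at roughly 0.081, so each warm restart begins at 90% of the previous peak.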
